Meeting client requirements with SharePoint often involves aggregating items somehow – often we want to display things like “all the overdue tasks across all finance sites”, or “navigation links to all of the subsites of this area” or “related items (e.g. tagged with the same term)” and so on. In SharePoint 2010 there have been two main ways of accomplishing this:

- Content Query web part

- Custom solution built on SPSiteDataQuery (site collection-scoped), SPQuery (list-scoped) or search API

To a lesser extent, using the search web parts as part of a custom solution may also have been an option. Regardless, it was common to need custom code to meet such requirements. Maybe we needed to add paging to the results, or we needed to use some value obtained dynamically through code (e.g. from the current site/current page/current user/something else) – several Codeplex solutions arose from this gap, and lots of lines of code were written.

SharePoint 2013 presents the Content Search web part as a new option – its capabilities mean that simply using the web part (with some front-end work to meet look and feel requirements) will meet many needs, without the use of custom code. If you’re a developer, the following screenshot should give you a clue as to why code won’t be required too often (with one of my favorite options highlighted):

It’s incredibly powerful, and it’s a good idea to understand what it can do.

Understanding the deal with search-based solutions

As the name suggests, the Content Search web part is powered by SharePoint’s search function. As such, there are the following considerations:

- The CSWP can be configured to “see” items anywhere in SharePoint (potential advantage)

  - In contrast, the CQWP and related SPSiteDataQuery can only search within the current site collection - the site collection “boundary” is a factor

- Results shown are not guaranteed to be 100% up-to-date (potential disadvantage)

  - Since a search crawl has to run before any content changes will be shown in search results (remember this can include titles, summaries, images and so on for pages/documents), if a user creates/edits an item it will not be shown immediately. This can be a critical point.

  - Furthermore, my understanding from a FAST engineer is that in SharePoint 2013 there is no longer any means of pushing a document directly into the search index – in previous FAST incarnations including FAST for SharePoint 2010, there were options such as docpush.exe for “proactively” adding an item to the index, rather than waiting for the next search crawl.

  - That said, it should be possible to obtain much lower indexing latencies in SharePoint 2013 via the “Continuous Crawl” capability. In most deployments, my guess would be that changes would be reflected within a few minutes at most if this is enabled (where previously you may have had an incremental crawl scheduled every 15, 30 or 60 minutes for a SharePoint sites content source).

Summary - if the functionality you are creating needs fully up-to-date results (e.g. a user has created/edited something and it needs to be *immediately* reflected in the site) then you will probably need to stick with the original approaches (i.e. a query-based rather than search-based solution).

Terminology – new concepts in SharePoint 2013 search

So if we’re going to build solutions on SP2013 search, we need to have a basic understanding of some concepts – we’ll run into these time and time again:

| Concept | My quick definition |
| --- | --- |
| Result Source | Like a search ‘scope’ in SP2007/SP2010, but on steroids. Rules are specified to say what the scope consists of – e.g. DOCUMENTS in my TEAM SITES area (constraining on content type and path in this example). Created centrally, or at the web level. Result Sources can be used in just about any search-related functionality, including the Content Search web part. |
| Query Rule | Like a ‘best bet’ on steroids. Ability to do specially formatted results at top of results list (e.g. Promoted Result) for highly-recommended content. In addition to Promoted Result, we can also do a Result Block (example could be a block of 5 image results within main list of text links). Another option is to Change the Ranked Results – i.e. put something at the top, promote or demote something by 1-10 (previously known as a ‘boost’ in FAST). LOTS of flexibility in matching the user’s query, including regular expressions and matching terms in the Managed Metadata store. |
| Display Templates | A Display Template is a JavaScript template (similar to jQuery templates) which controls formatting – in the case of the CSWP, this effectively replaces the use of XSL for look and feel. There are separate templates to pick: one for the overall control and one for the formatting of an individual item. The .js files for the templates are stored in the ‘Content Web Parts’ subfolder of the Master Page Gallery. Side note – in the context of a search results page (rather than CSWP), a Display Template is associated with a Result Type (e.g. Word doc, wiki page, PowerPoint file etc.) and so we have granular control over how each is displayed (and when). Extremely cool. |

So, lots of flexibility in the search infrastructure. Let's see some of this in the context of the Content Search web part.

Configuring the Content Search web part

There are two main aspects to this:

- Displaying the right items (Search Criteria)

- Look and feel (Display Templates)

In terms of the search criteria, there is *enormous* flexibility in what the CSWP - and the underlying search capability - can do. For one thing, it’s possible to either directly configure the query entirely in the properties of this web part instance (e.g. show me all documents which meet criteria X), and/or start from a pre-existing Result Source to do some of the filtering. Combining the approaches will be fairly common – an example could be “search only on wiki pages” (an OOTB Result Source) but only show items tagged with X (this defined directly in the CSWP properties).

Interestingly, configuring a centralized Result Source and a Content Search web part on a page are very similar, even though it would seem some sort of “reusable scope” and a web part are very different things in SharePoint. The overlap comes because underneath both there is a search query which does the work of isolating the desired results - indeed, as we'll see later the same “Query Builder” UI is used in both places (with a couple of minor differences). So, if you’ve learnt how to configure a CSWP you’ve essentially also learned how to create a custom Result Source.

Configuring the web part

The first thing to understand is that the Content Search web part appears in different guises in the web part gallery. The ‘main’ web part is in the ‘Content Rollup’ category:

But there are also many pre-configured versions available, each of which finds a specific type of content. This is great for end-users who don’t necessarily think in terms of needing a ‘Content Search’ web part:

And just to prove the point, the web parts above correspond to the following .webpart definition files in the Web Part Gallery:

Once the web part has been added to the page, it can be configured via its tool pane. The main configuration item is the query to use, and this can be started by clicking the ‘Change query’ button:

This opens the “Build Your Query” dialog – this has tabs labeled BASICS, REFINERS, SORTING, SETTINGS and TEST. This thing is known (unsurprisingly) as the Query Builder – what you might not realize is that it’s used in several places in SharePoint 2013:

- Configuring a Content Search web part (obviously)

- Creating a Result Source (specifically in the Query Transform section)

- Configuring a Search Results web part

There are some differences – for example, when configuring a Search Results web part there is no SORTING tab because this will be handled in the Result Source or the query.

I’m going to talk about things from the perspective of the Content Search web part, but will call out any differences for the other usages – so hopefully by learning the CSWP, you also get to learn 75% of the search infrastructure.

BASICS tab – Quick Mode

Although the first tab is labeled ‘BASICS’, I’d say it’s actually the most involved - this is where the query itself is configured, and there is a ‘Quick Mode’ and ‘Advanced Mode’. You’ll also notice - and let me just say I’d personally be willing to give the Product Manager for this feature A BIG HUG for this - that there’s a “live” results preview pane, permanently visible on the right-hand side of the Query Builder. This shows the first 10 results which would display from running the currently configured search against the current index, without the need to save the web part after each change:

Note that if you create your own query, then this preview pane is only able to show results when you are on the TEST tab. And we’ll talk about that towards the end.

Let’s now walk through the various configuration steps in here.

Select a query

In Quick Mode, the dropdown contains the Result Sources (see my definition above if you’ve forgotten already :)) which come out-of-the-box with SharePoint 2013 – one of these may provide a good foundation for what you need:

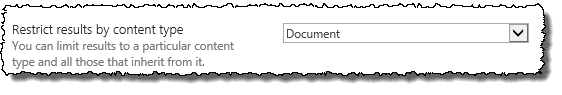

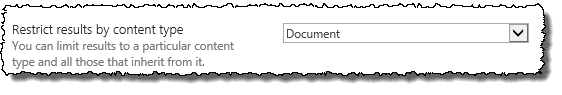

As you select a Result Source from the dropdown, other options may become available lower down. So if I want to find items matching a specific content type, I get this:

In fact, this option to restrict by content type appears for many of the pre-defined Result Sources, not just “Items matching a content type” – which makes sense, because it’s a common thing to include as a filter. Similarly, “Items matching a tag” and several other queries give this interface for selecting a tag to filter on:

And, happy days, if I specify the tag by typing one I get auto-complete to help me pick the term – this is a fully-fledged Managed Metadata input field. Consequently there’s also full validation of the terms you type-in (though this takes a few seconds to show), so if an author accidentally enters something which isn’t a known term, he/she should spot the mistake immediately:

Consider also that those middle options, which use the navigation term associated with the current page, are exactly what’s needed to build many types of ‘related items’ functionality – again, no code needed now.

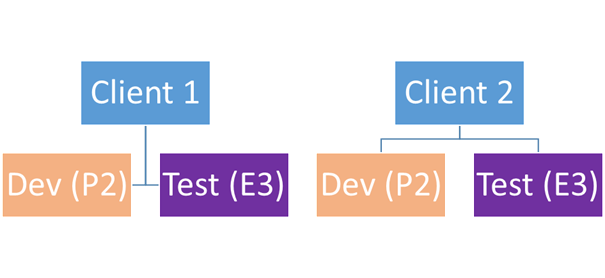

Restrict results by app

In the next section, I can restrict the scope of the results to a particular location (e.g. the current site). This enables me to get something like the Content Query web part behavior of only searching within the current site collection if needed – because although we now have the power, it won’t always make sense to go across the entire farm :)

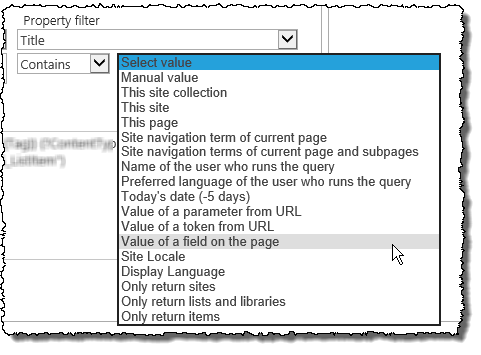

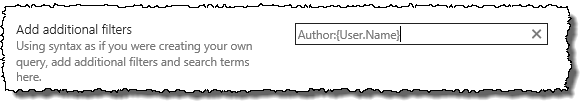

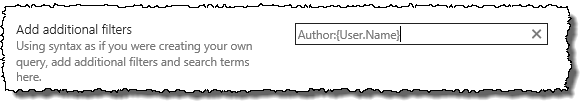

Add additional filters

In the next section I can supplement the query with any valid query text, e.g. a property filter. In this example, I’m adding a filter to only present items which were created by the current user:
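To make the idea of filter text a bit more concrete, here is a minimal sketch (in Python, purely for illustration) of how a property filter like this could be assembled as KQL. The helper names `kql_filter` and `combine` are hypothetical, and `ContentType` and `Author` are just example managed properties; `{User.Name}` is one of the query variables SharePoint expands when the query runs.

```python
def kql_filter(prop: str, value: str) -> str:
    """Return a KQL property restriction such as Author:{User.Name}."""
    # Query variables (e.g. {User.Name}) are passed through untouched,
    # so that SharePoint can expand them when the query runs.
    if value.startswith("{") and value.endswith("}"):
        return f"{prop}:{value}"
    # Literal values are quoted so multi-word values stay together.
    if '"' in value:
        raise ValueError("double quotes are not supported in this sketch")
    return f'{prop}:"{value}"'


def combine(*clauses: str) -> str:
    """Join clauses with an explicit AND so that every filter must match."""
    return " AND ".join(clause for clause in clauses if clause)


query = combine(
    kql_filter("ContentType", "Document"),
    kql_filter("Author", "{User.Name}"),
)
print(query)  # ContentType:"Document" AND Author:{User.Name}
```

The explicit AND is a deliberate choice in this sketch: it keeps the meaning unambiguous even if the same property ends up being filtered on twice.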

Sort results

When we scope our query to a pre-defined Result Source (as we are here in the CSWP ‘Quick Mode’), then sorting is usually pre-defined at that level. The CSWP does give us the opportunity to override sorting based on some popularity ranking models (around most viewed/most clicked) instead though – expect proper wording to appear in this dropdown in the RTM version, but you get the idea:

So what happens if none of the options presented so far do what you want? An example could be wanting to use an existing Result Source (e.g. ‘wiki pages’) but sort on Last Modified in descending order. Obviously the dropdown above does not allow that. We could create a custom Result Source and implement the query/sorting there, but that only really makes sense if we expect it to be re-used in multiple places.

In these cases, we can click into Advanced Mode (still on the BASICS tab).

BASICS tab – Advanced Mode

In Advanced Mode you basically get to specify the full query text yourself. In my mind, this is like building a solution with the search API in SP2007/SP2010 – I saw many custom solutions (and built several myself) which used the FullTextSqlQuery or KeywordQuery classes to find the right items. SharePoint 2013 makes it much easier to have this full control whilst still piggybacking onto the out-of-the-box web parts – meaning less work and more productivity.

When switching to the Advanced Mode, a couple of things become available:

- A SORTING tab (details later)

- Controls to help you build the query (which you’d previously do essentially by hand in earlier versions), with ‘Keyword filter’ and ‘Property filter’ options. These can be combined as you like, and the resulting query text appears in the textbox at the bottom:

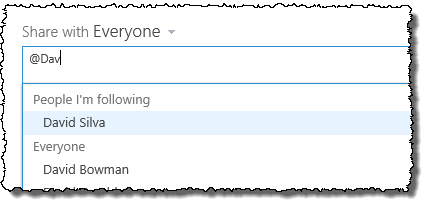

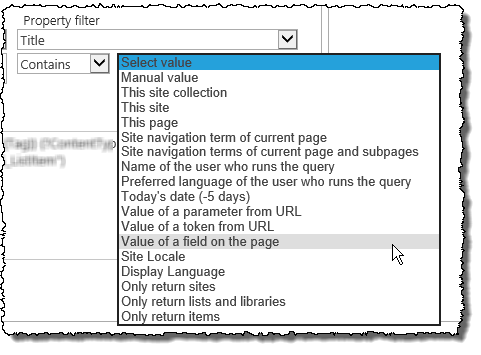

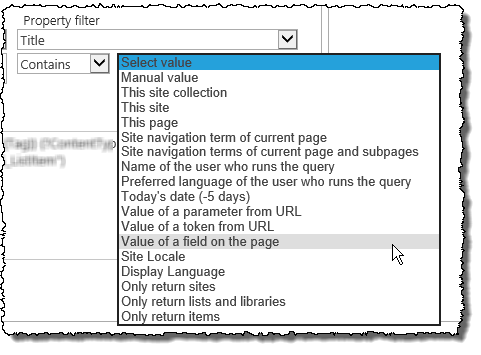

Avoid custom code by using tokens

There are many tokens which can be used when building a query in this way – often you might want to pass something into the query, such as a URL (querystring) parameter, the value in a particular field on the page, and so on. Being able to do this unlocks a huge range of possibilities for building solutions. This is where the first image in this article comes from – here’s a reminder:

In summary, when using the Advanced Mode of the query builder you should be able to target just about any content in your SharePoint environment.
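As a rough mental model of what token substitution does, the sketch below replaces `{Token.Name}` placeholders in a query template with values from a lookup. SharePoint does the real expansion on the server when the query runs, so this is only an illustration: the function `expand_tokens` and the context values are hypothetical, although `{Site.URL}`, `{User.Name}` and `{QueryString.<parameter>}` are the kind of tokens the Query Builder offers.

```python
import re

# Matches placeholders such as {User.Name} or {QueryString.category}.
TOKEN = re.compile(r"\{([A-Za-z][\w.]*)\}")


def expand_tokens(query_template: str, context: dict) -> str:
    """Replace known {Token} placeholders; leave unknown ones untouched."""

    def replace(match):
        name = match.group(1)
        # Fall back to the original placeholder text if we have no value.
        return context.get(name, match.group(0))

    return TOKEN.sub(replace, query_template)


template = 'Path:"{Site.URL}" Author:"{User.Name}" Category:"{QueryString.category}"'
context = {
    "Site.URL": "http://intranet/sites/finance",  # hypothetical current site
    "User.Name": "Jane Doe",                      # hypothetical current user
}
print(expand_tokens(template, context))
```

In this run the querystring token stays as-is because no value was supplied for it, which is a reminder to think about what your query should do when a token has nothing to expand to.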

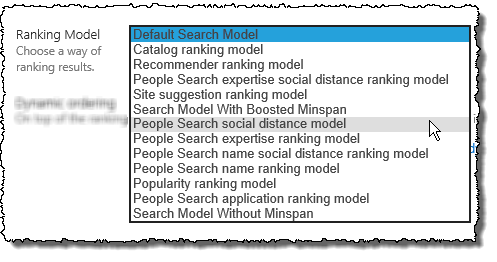

SORTING tab (Advanced Mode only)

In SharePoint 2010 Enterprise Search, you could only sort by relevance/rank (the normal search engine approach) or date. FAST for SharePoint 2010 had more options (you could sort by a Managed Property). In SharePoint 2013, frankly the sort options alone are enough to blow your mind :) If you don’t need anything specific around sorting then you can skip this bit, but if you do then here are your options:

First you can sort by way more things than just rank and date:

One thing to note there – I’m unclear as to what makes it into that ‘Sort by’ list and what does not. It’s not Managed Properties as far as I can tell, so although the list is long many options may not be hugely useful. Still, better than before.

Usefully, you can now do multi-level sorting (sort by this, then by that). The ‘Add sort level’ link in the image above adds another row, allowing me to do things like sorting by URL depth (so items higher up in the site hierarchy show at the top), and then by rank (that makes sense, because there’ll be lots of items at the same URL depth so I do need two levels of sorting):

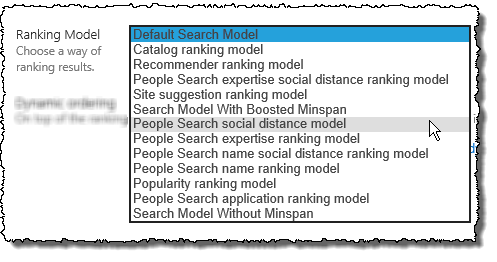
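If you ever need the same two-level sort outside the web part, the search REST endpoint accepts a `sortlist` parameter. The sketch below only builds the URL and does not call anything. The site URL, the helper name `build_search_url` and the query text are hypothetical, and I’m assuming `UrlDepth` and `Rank` are usable sort properties in your environment, so treat it as a starting point rather than a recipe.

```python
from urllib.parse import quote

# Characters left unencoded so the URL stays readable.
SAFE = "':,"


def build_search_url(site_url, query_text, sort_levels, row_limit=10):
    """Build a search REST URL with a multi-level sort (sketch only)."""
    for _, direction in sort_levels:
        if direction not in ("ascending", "descending"):
            raise ValueError(f"unknown sort direction: {direction}")
    # Each level is "Property:direction"; list order is the sort priority.
    sort_list = ",".join(f"{prop}:{direction}" for prop, direction in sort_levels)
    # String parameters are wrapped in single quotes; embedded quotes are doubled.
    escaped_query = query_text.replace("'", "''")
    params = [
        ("querytext", f"'{escaped_query}'"),
        ("sortlist", f"'{sort_list}'"),
        ("rowlimit", str(row_limit)),
    ]
    encoded = "&".join(f"{name}={quote(value, safe=SAFE)}" for name, value in params)
    return f"{site_url.rstrip('/')}/_api/search/query?{encoded}"


url = build_search_url(
    "http://intranet/",
    'ContentType:"Wiki Page"',
    [("UrlDepth", "ascending"), ("Rank", "descending")],
)
print(url)
```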

Note that effectively what I’m doing here is building some sort of custom ranking model. This works great if I need something very specific on sorting, but also note SharePoint 2013 comes with several ranking models – the next section allows me to pick from these if I’ve left the ‘Sort by’ dropdown on ‘Rank’, unlike in the image above. This is because all these options are effectively different forms of rank – most are around People Search or popularity:

And for those occasions when the client is telling you that his/her strategic document really has to be on page 1 of the results (but not a Promoted Result/best bet), you have ‘Dynamic ordering’ – you can boost/demote results, including the option to promote to the top:
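My understanding is that this kind of boosting corresponds to the XRANK operator in KQL, which adds a constant to the rank of any result matching a second expression. A minimal sketch of composing such a query follows; the helper name `boost` and the example properties and values are hypothetical, and the web part may well generate different text than this.

```python
def boost(match_expr: str, boost_expr: str, constant_boost: int = 100) -> str:
    """Wrap a query so results also matching boost_expr get extra rank.

    A negative constant_boost demotes matching results instead.
    """
    return f"({match_expr}) XRANK(cb={constant_boost}) ({boost_expr})"


# Find all documents, but push anything with "strategy" in the title upwards.
print(boost("ContentType:Document", 'Title:"strategy"', 500))
# (ContentType:Document) XRANK(cb=500) (Title:"strategy")
```

Note that the boost expression does not filter anything out: items which only match the first expression are still returned, just without the extra rank.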

REFINERS tab

In the context of search, refiners are usually the links on the search engine’s results page (typically in the left nav) which allow the user to further filter the results. So if I do a search for “meeting minutes” and get lots of results, it would be nice to be able to filter by, say:

- Date range

- SharePoint site (since minutes might be stored in individual project sites)

- Author

- ..and so on

However, in the context of the Content Search web part, refiners actually allow you to do this filtering as part of the initial query. The REFINERS tab is effectively a convenience to you, the person configuring the web part – what happens is that a search is performed whilst in edit mode, and all relevant refiners (e.g. managed properties) are presented as available refiners. These can be selected and moved over to the right-hand list:

The effect of this is that a further filter is added to my query. In the example above, this may be easier than using a Property Filter on the BASICS tab – since there I have little support, I just select the property and type the value:

In the REFINERS tab, SharePoint is doing the search for me (as it’s configured so far), and only coming back with values which have been found in the returned results.
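Conceptually, that behavior amounts to counting the distinct values of a property across whatever the current query returns. The toy model below shows the idea on an in-memory list of results; it says nothing about how SharePoint actually computes refiners, and the function name `available_refiners` and the sample data are made up.

```python
from collections import Counter


def available_refiners(results, refiner_property):
    """Return (value, count) pairs for values present in the results."""
    counts = Counter(
        item[refiner_property] for item in results if refiner_property in item
    )
    # Most frequent values first, which is how refiner lists usually read.
    return counts.most_common()


results = [
    {"Title": "Q1 minutes", "Author": "Ann"},
    {"Title": "Q2 minutes", "Author": "Bob"},
    {"Title": "Q3 minutes", "Author": "Ann"},
    {"Title": "Untitled"},  # no Author value, so it contributes nothing
]
print(available_refiners(results, "Author"))  # [('Ann', 2), ('Bob', 1)]
```

The point is that you can only ever pick from values which actually occur in the results, so a typo is much harder to make than when typing a Property Filter value by hand.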

SETTINGS tab

The SETTINGS tab controls some high-level options for running the search:

Query rules

Since these can be defined at the parent site or search service, it could be the case that your CSWP gets affected by one of these. As the radio button shows, this can be overridden, but consider that some types of Query Rules may not have an effect anyway - as a reminder (from the table at the beginning), a Query Rule can either:

- Add a promoted result

- Add a result block

- Change the ranked results somehow (by modifying the query)

Out of these 3 actions, 1.5 of them could affect the results of a ‘default’ CSWP. This can be summarized:

| Query Rule Action | Will affect CSWP results? |
| --- | --- |
| Add a promoted result | Not by default. When a search runs in SharePoint, multiple result sets are returned (e.g. ‘main results’, ‘best bet results’ and so on - in SP2013, the real names for these are ‘RelevantResults’, ‘SpecialTermResults’, ‘PersonalFavoriteResults’ and ‘RefinementResults’). Although a CSWP can be configured to show any table, the default is ‘RelevantResults’ – and a promoted result gets added to ‘SpecialTermResults’. |
| Add a result block | Yes if result block is configured to show ‘ranked within core results’ (the default), rather than ‘shown above core results’. |
| Change ranked results | Yes. |

For completeness, here’s the place in the CSWP where you select which search result set to use (e.g. if you want to switch from the default of ‘RelevantResults’):

Options in the Results Table dropdown (shown to the left):

URL rewriting

This one is fairly simple – if results are being returned from a catalog which is using “friendly” URLs, then the CSWP can override this to use the original URLs. It may not always make sense to use rewritten URLs in aggregations outside of the catalog pages, especially if you’ve implemented anything funky there.

Loading behavior

This is useful – specify whether the CSWP web part instance should load in the main page load (default) or in an AJAX manner after the main page has finished. Considering that a CSWP could either be the centerpiece of your landing page or merely some page footer navigation, it’s nice to be able to prioritize in this way.

Priority

Similarly, we can actually specify High, Medium or Low priority for each CSWP instance we use – great for the different usages we will have, although as per the description, note this only has any effect if the search service is overloaded.

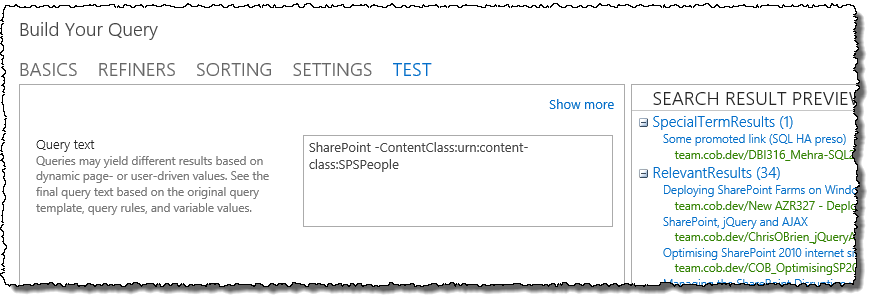

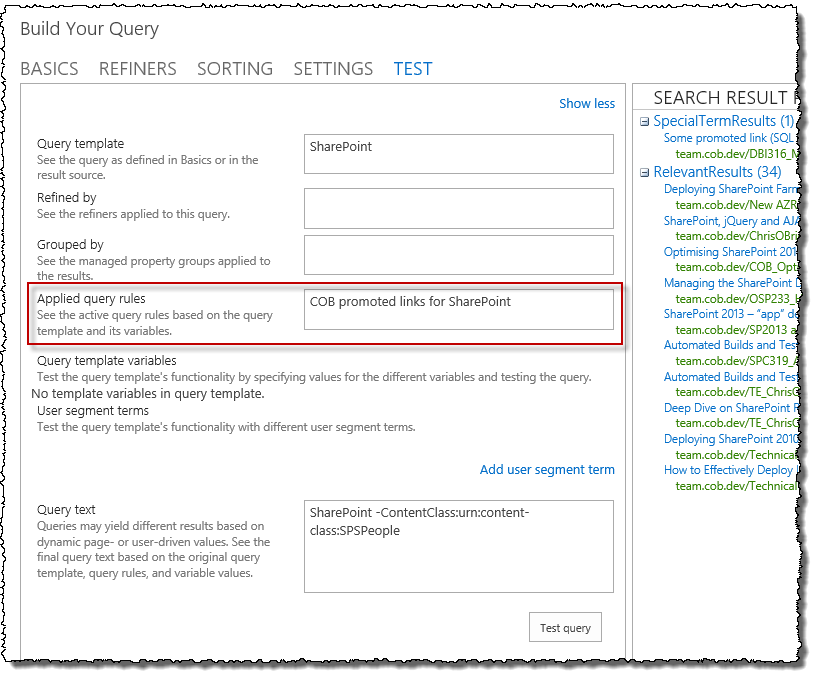

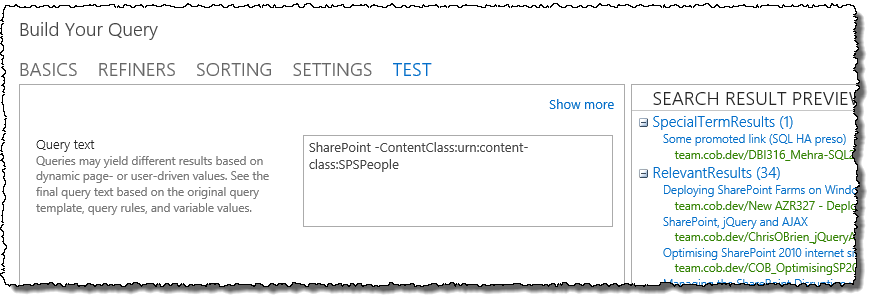

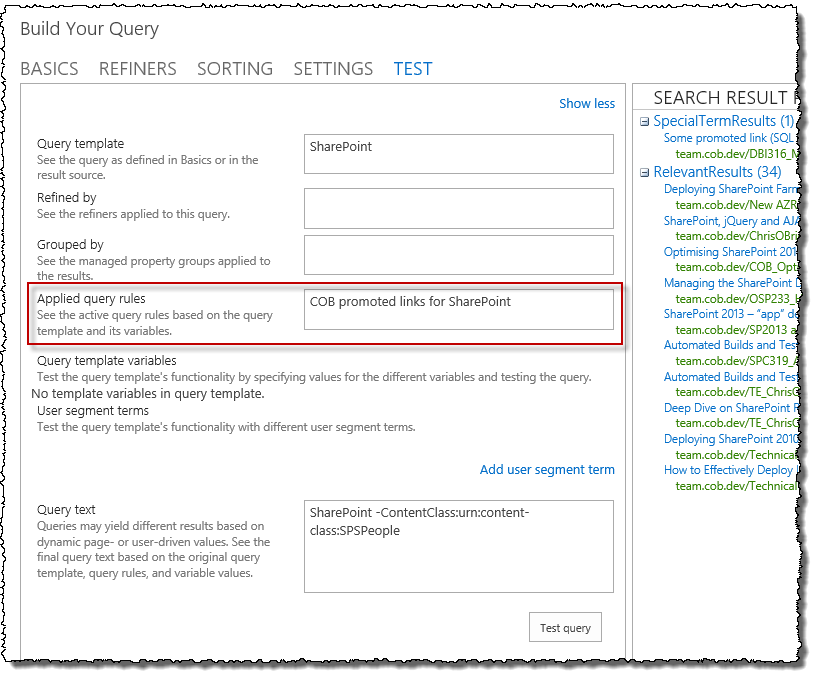

TEST tab

The TEST tab is hugely useful – it provides you the ability:

- To see the underlying query text (in Keyword Query Language [KQL]) which has been generated (though it must be edited in other tabs)

- To see the preview when you are defining a query yourself (the preview pane will be empty on other tabs in this scenario)

Which is all great, but at first glance it’s easy to miss some extra functionality – if the ‘Show more’ link is clicked, other information becomes visible including details on any refiners and Query Rules which have been applied. So below I can see that a custom Query Rule I created has indeed been used, so there’s no guesswork on (for example) whether a certain item is actually being promoted or not:

Sidenote - listing items from ONE site/list/library with the Content Search web part

Worthy of a quick note - if all you need to do is roll-up content from one list/library, then you *can* do this with the CSWP – in the query, simply restrict the search using PATH:[URL to document library]. The Query Builder UI helps you do this by providing the ‘Restrict by app’ area:

N.B. one potential gotcha here is that you need ‘HTTP’ in the path if your sites are browsed on HTTPS but crawled on HTTP (as in my case).
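A small sketch of that gotcha: take the URL as you see it in the browser, swap in the scheme the crawler uses, and wrap the result in a Path restriction. The function name `path_restriction` and the library URL are hypothetical, and whether you need the swap at all depends on how your content source is configured.

```python
from urllib.parse import urlsplit, urlunsplit


def path_restriction(browsed_url: str, crawl_scheme: str = "http") -> str:
    """Build a KQL Path filter using the scheme the crawler indexed with."""
    parts = urlsplit(browsed_url)
    # Only the scheme changes; host and path are kept exactly as given.
    crawled_url = urlunsplit(parts._replace(scheme=crawl_scheme))
    # Quotes keep URLs containing spaces together as one value.
    return f'Path:"{crawled_url}"'


print(path_restriction("https://intranet/sites/finance/Shared Documents"))
# Path:"http://intranet/sites/finance/Shared Documents"
```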

If you do want to filter by site/list/library, consider of course that the good ol’ Content Query web part will work just fine here, *and* you’ll get instant changes as content is changed. What you won’t have is the Content Search Web Part’s ability to automatically use tokens in the query (e.g. value of current navigation category, value from current user’s profile etc.)

Summary

The Content Search web part is a great tool in the SharePoint consultant’s box of tricks. Configuration may prove quite simple for some scenarios, but there is also a huge amount of flexibility and so a certain degree of complexity comes with that. Many advanced scenarios which make use of SP2013 search capabilities (such as Result Sources, Query Rules, promoted results and so on) will be possible – knowing the details will help you identify whether the CSWP can be the answer to a particular problem or not.

In this post, we looked simply at “displaying the right items” i.e. the search query aspect. In other posts, I’ll talk about:

- Displaying items

- CSWP tips

- How to implement for 5 key scenarios

- A simple decision process for working with the CSWP